- git pull https://github.com/zhuby1973/python/blob/master/WordCount.java to hadoop VM

- add environment variables:

export PATH=${JAVA_HOME}/bin:${PATH}

export HADOOP_CLASSPATH=${JAVA_HOME}/lib/tools.jar - compile

$ hadoop com.sun.tools.javac.Main WordCount.java

$ jar cf wc.jar WordCount*.class - create input directory in HDFS

hdfs dfs -mkdir /wordcount

hdfs dfs -mkdir /wordcount/input

echo "Hello World Bye World" > file01

echo "Hello Hadoop Goodbye Hadoop" > file02

hadoop fs -put file0* /wordcount/input

hadoop fs -ls /wordcount/input

hadoop fs -cat /wordcount/input/file01 - edit "~/hadoop-3.1.3/etc/hadoop/yarn-site.xml" as below:

<configuration>

<property>

<name>mapreduceyarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>- edit ~/hadoop-3.1.3/etc/hadoop/mapred-site.xml as below:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

</configuration>you need stop-all.sh and start-all.sh to restart hadoop after changes.

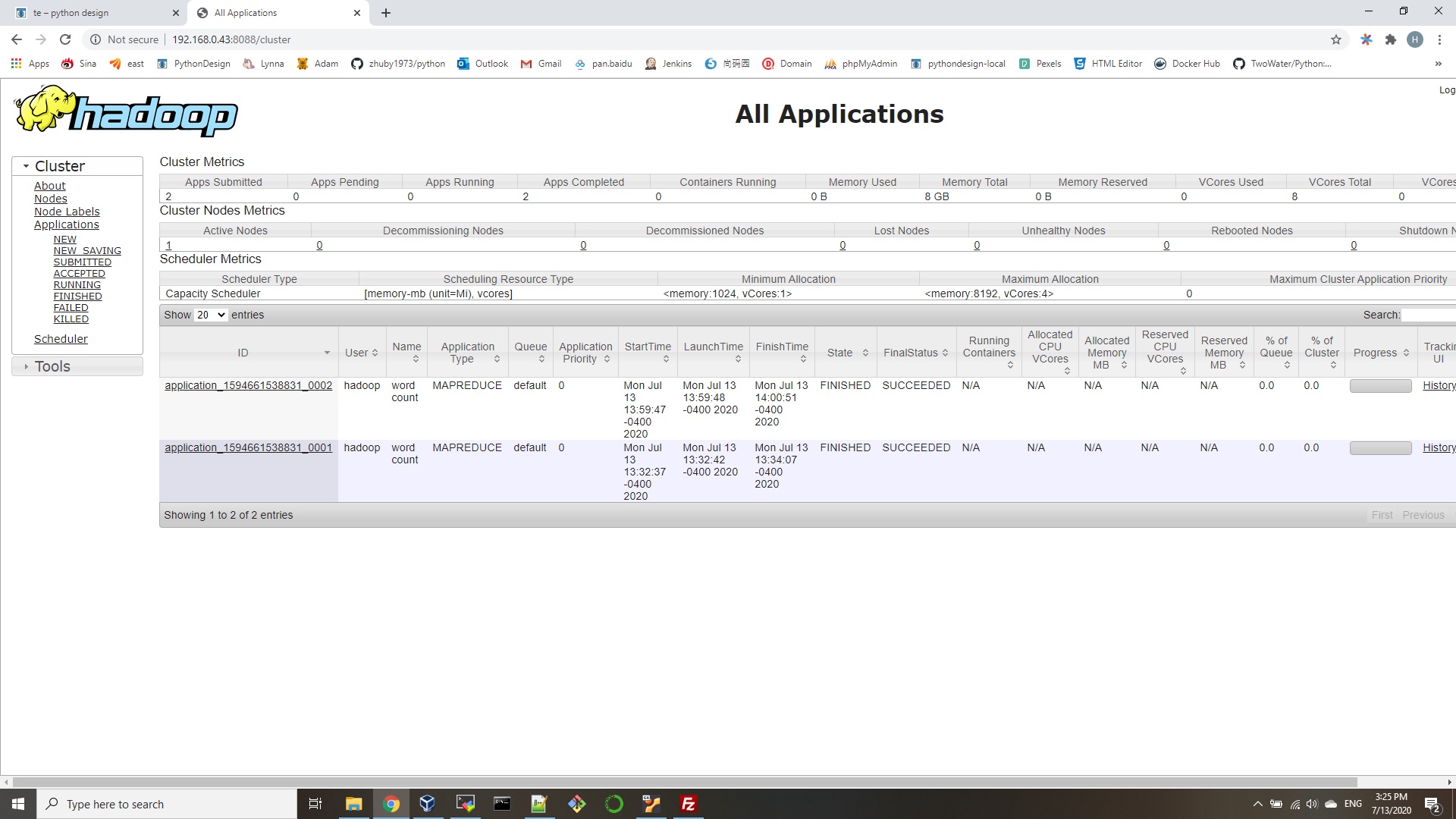

- Run the application:

$ hadoop jar wc.jar WordCount /wordcount/input /wordcount/output

verify the output:

hadoop@ubunu2004:~$ hadoop fs -ls /wordcount/output

Found 2 items

-rw-r–r– 1 hadoop supergroup 0 2020-07-13 13:34 /wordcount/output/_SUCCESS

-rw-r–r– 1 hadoop supergroup 41 2020-07-13 13:34 /wordcount/output/part-r-00000

you need delete /wordcount/output if you need run it again:

hadoop fs -rm -r -f /wordcount/output