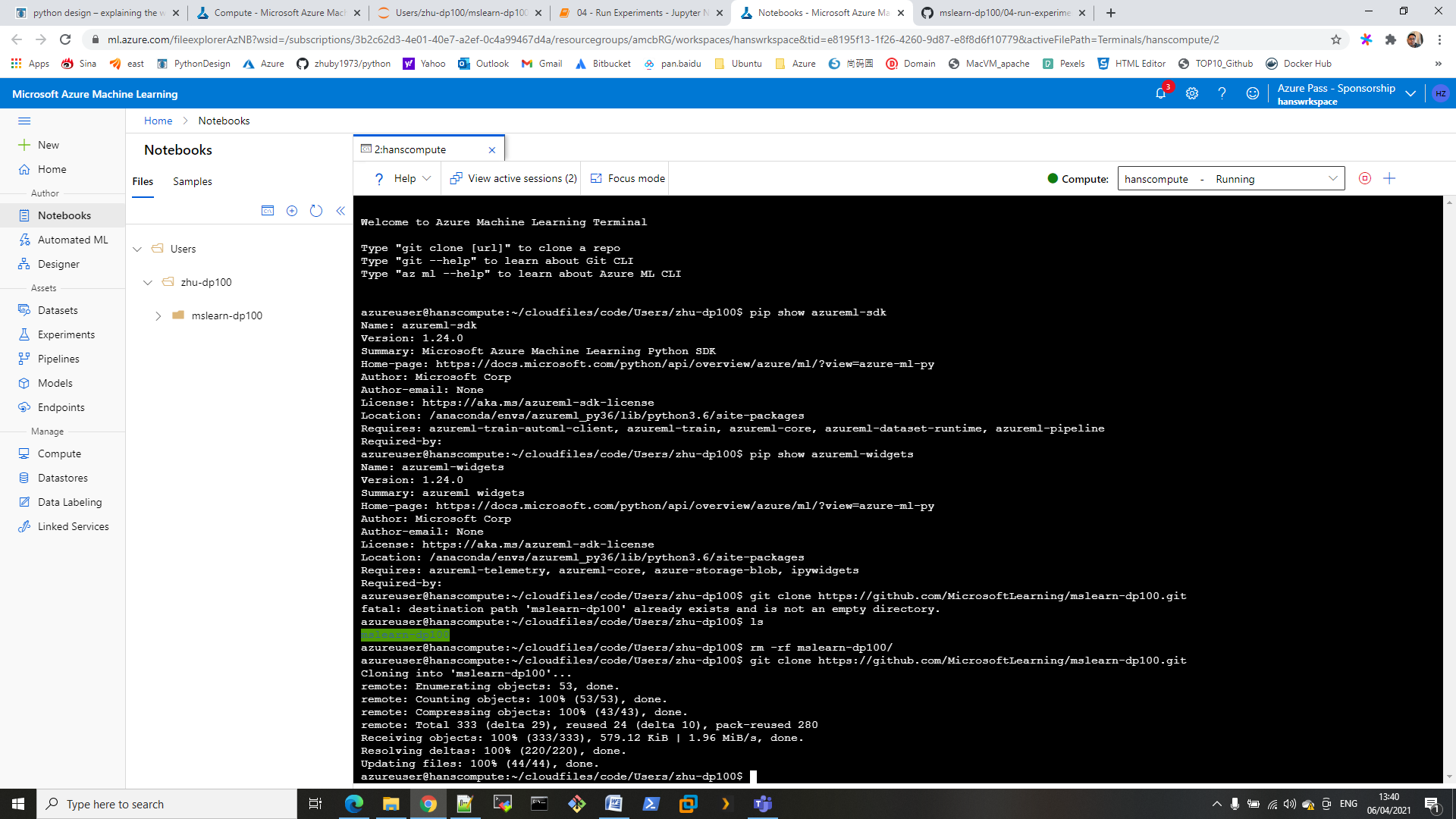

Step 1. Clone the repository

https://github.com/MicrosoftLearning/AI-102-AIEngineer

We will use 13-bot-framework\Python

Step 2. Create a bot based on the EchoBot template

pip install botbuilder-core

pip install asyncio

pip install aiohttp

pip install cookiecutter==1.7.0

cookiecutter https://github.com/microsoft/botbuilder-python/releases/download/Templates/echo.zip

When prompted by cookiecutter, enter the following details:

bot_name: TimeBot

bot_description: A bot for our times

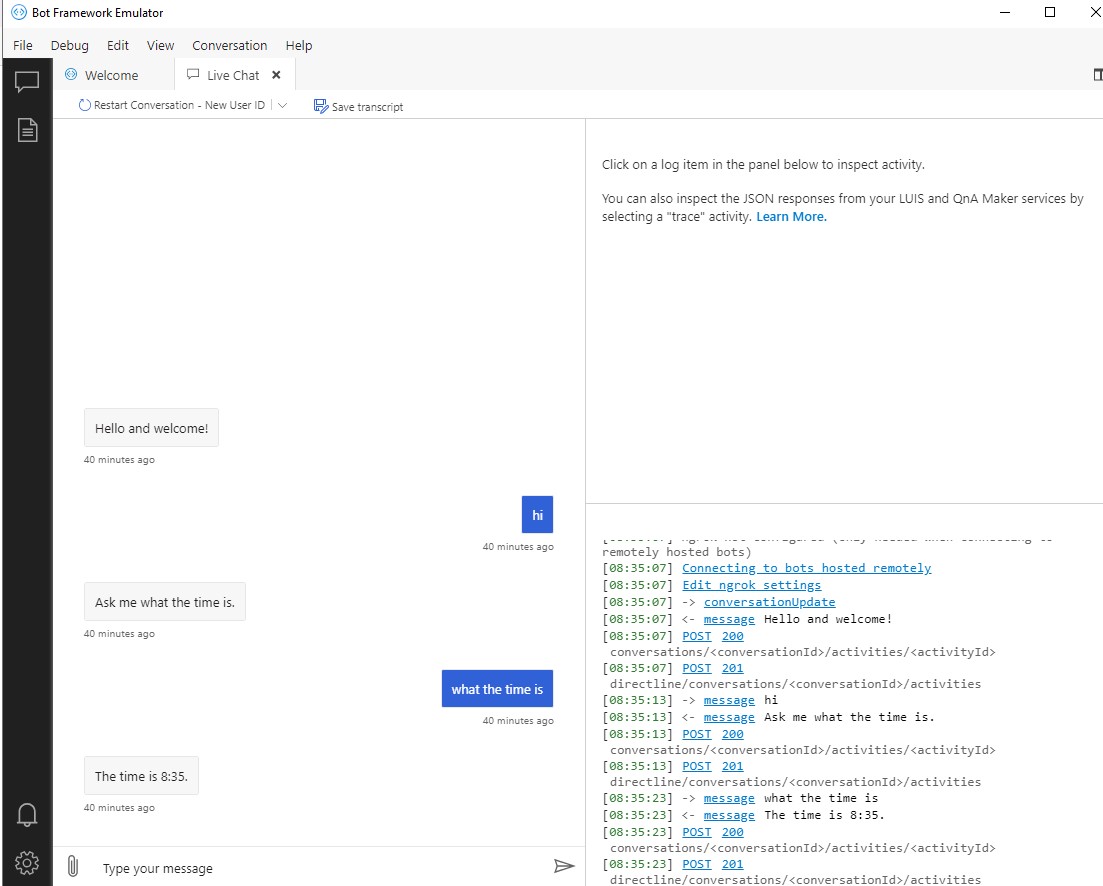

Step 3. Test the bot in the Bot Framework Emulator

(base) C:\Users\Student\hans\AI-102-AIEngineer\13-bot-framework\Python\TimeBot>python app.py

======== Running on http://localhost:3978 ========

(Press CTRL+C to quit)

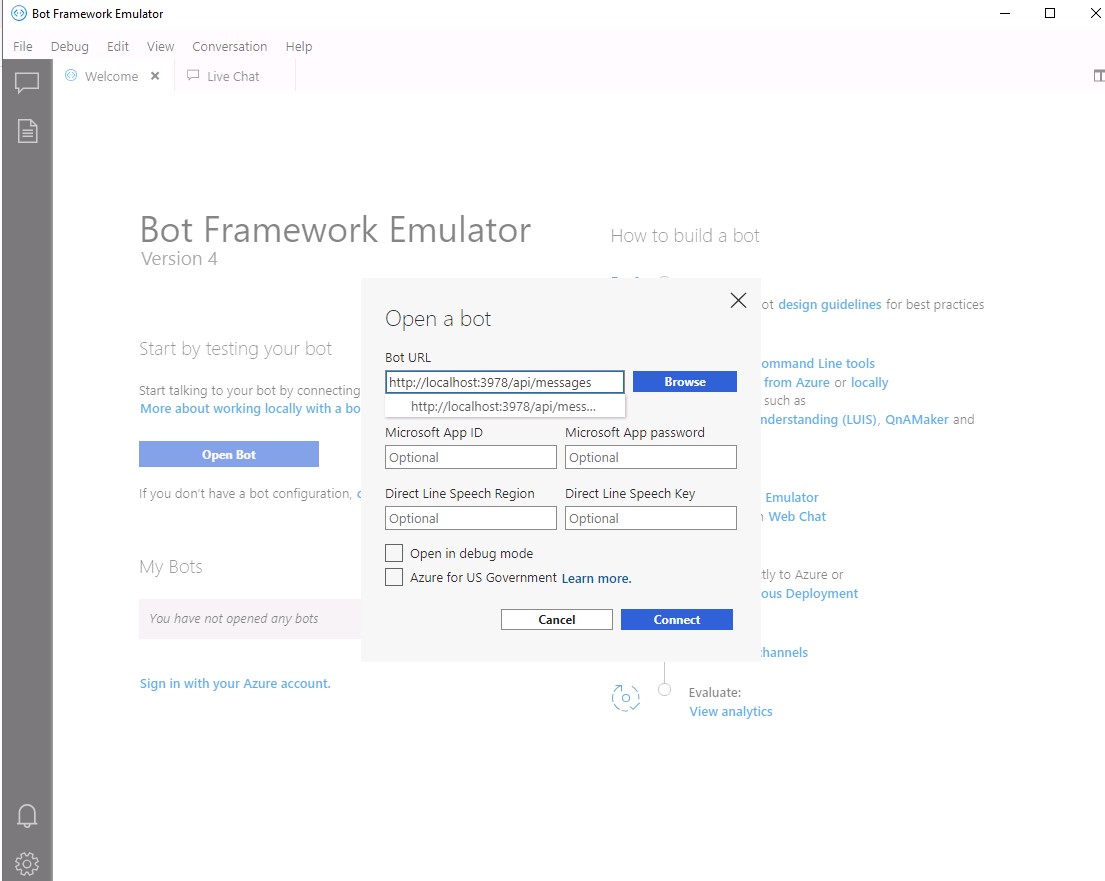

Start the Bot Framework Emulator, and open your bot by specifying the endpoint with the /api/messages path appended, like this: http://localhost:3978/api/messages

Step 4. Update bot.py

    # Copyright (c) Microsoft Corporation. All rights reserved.
    # Licensed under the MIT License.

    from botbuilder.core import ActivityHandler, TurnContext
    from botbuilder.schema import ChannelAccount
    from datetime import datetime


    class MyBot(ActivityHandler):
        # See https://aka.ms/about-bot-activity-message to learn more about the message and other activity types.

        async def on_message_activity(self, turn_context: TurnContext):
            input_message = turn_context.activity.text
            response_message = 'Ask me what the time is.'
            if (input_message.lower().startswith('what') and 'time' in input_message.lower()):
                now = datetime.now()
                response_message = 'The time is {}:{:02d}.'.format(now.hour, now.minute)
            await turn_context.send_activity(response_message)

        async def on_members_added_activity(
            self,
            members_added: ChannelAccount,
            turn_context: TurnContext
        ):
            for member_added in members_added:
                if member_added.id != turn_context.activity.recipient.id:
                    await turn_context.send_activity("Hello and welcome!")

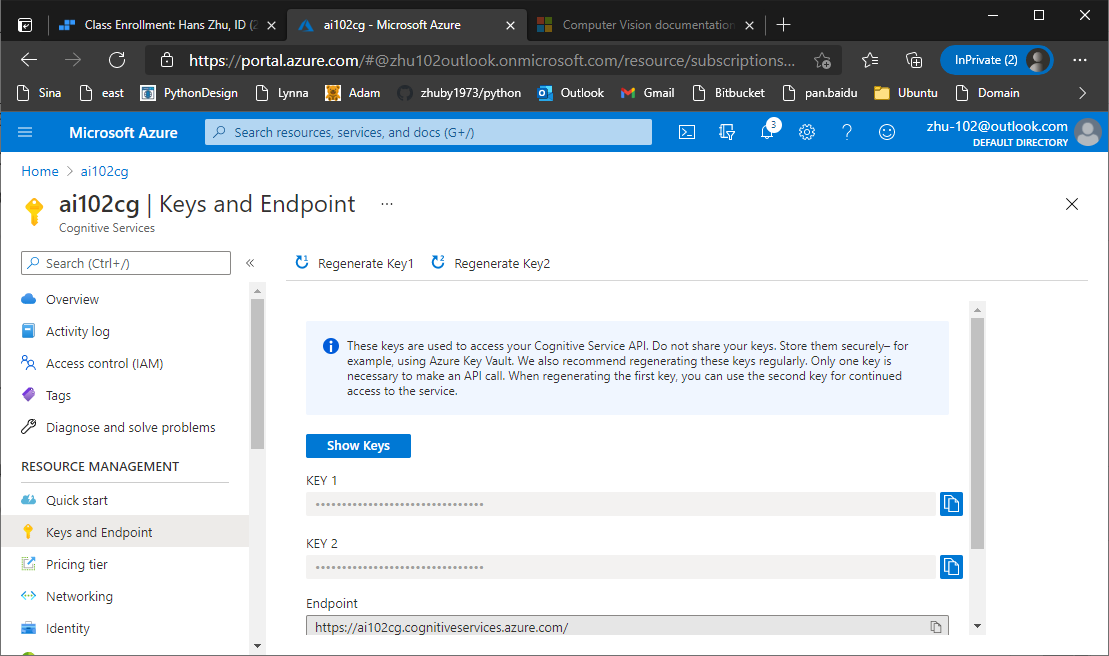
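The reply logic in bot.py can be tried without starting the bot or the Emulator. The following is a minimal sketch, not part of the template: `build_response` is a hypothetical helper that mirrors the condition in `on_message_activity`, and it takes the current time as an argument so the result is predictable.

```python
from datetime import datetime
from typing import Optional


def build_response(input_message: Optional[str], now: datetime) -> str:
    """Return the reply text for one message (hypothetical helper, not in the template)."""
    # Treat a missing message text as empty so .lower() cannot fail.
    text = (input_message or "").lower()
    if text.startswith("what") and "time" in text:
        return "The time is {}:{:02d}.".format(now.hour, now.minute)
    return "Ask me what the time is."


if __name__ == "__main__":
    fixed_time = datetime(2024, 1, 1, 9, 5)  # arbitrary example value
    print(build_response("What is the time?", fixed_time))
    print(build_response("Hello", fixed_time))
```

With the fixed time above, the first call returns "The time is 9:05." and the second returns the default prompt.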

Step 5. Create an Azure application registration

az login

az ad app create --display-name "TimeBot" --password "Super$ecretPassw0rd" --available-to-other-tenants

Step 6. Create Azure resources

az deployment group create --resource-group "YOUR_RESOURCE_GROUP" --template-file "deploymenttemplates/template-with-preexisting-rg.json" --parameters appId="YOUR_APP_ID" appSecret="Super$ecretPassw0rd" botId="A_UNIQUE_BOT_ID" newWebAppName="A_UNIQUE_WEB_APP_NAME" newAppServicePlanName="A_UNIQUE_PLAN_NAME" appServicePlanLocation="REGION" --name "A_UNIQUE_SERVICE_NAME"

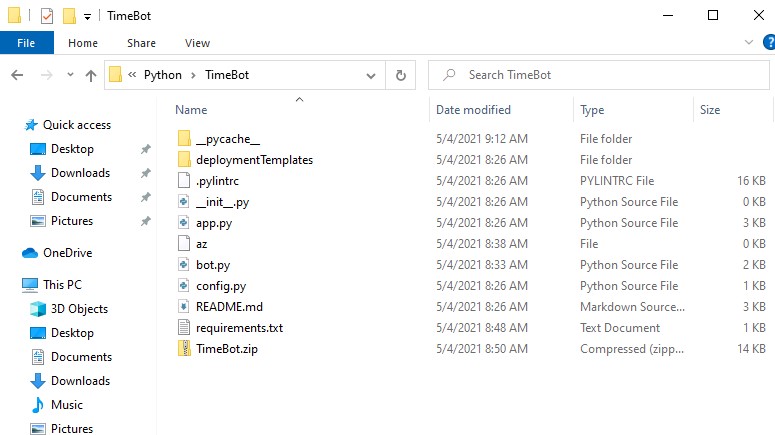
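The deployment command in Step 6 is long, and its key=value parameters are easy to mistype. As a rough sketch, the argument list could be assembled in Python before being run. `deployment_command` is a hypothetical helper, and all values shown are the same placeholders used in the command above. The sketch only builds the list and does not call the Azure CLI.

```python
from typing import Dict, List


def deployment_command(resource_group: str, template_file: str,
                       name: str, parameters: Dict[str, str]) -> List[str]:
    """Build the argument list for 'az deployment group create' (hypothetical helper)."""
    cmd = [
        "az", "deployment", "group", "create",
        "--resource-group", resource_group,
        "--template-file", template_file,
        "--name", name,
        "--parameters",
    ]
    # Each template parameter is passed as one KEY=VALUE argument.
    cmd += ["{}={}".format(key, value) for key, value in parameters.items()]
    return cmd


if __name__ == "__main__":
    args = deployment_command(
        "YOUR_RESOURCE_GROUP",
        "deploymenttemplates/template-with-preexisting-rg.json",
        "A_UNIQUE_SERVICE_NAME",
        {"appId": "YOUR_APP_ID", "botId": "A_UNIQUE_BOT_ID"},
    )
    print(" ".join(args))
```

Keeping each value as a separate list item avoids shell quoting problems with characters such as `$` in the secret.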

Step 7. Create a zip archive for deployment

Select all of the files in the TimeBot folder and add them to a zip archive named TimeBot.zip.
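The archive can also be created from a script instead of the file explorer. This is a minimal sketch using Python's standard `zipfile` module. It assumes the files should sit at the root of the archive (the contents of the folder, not the folder itself). `zip_folder_contents` is a hypothetical helper name.

```python
import zipfile
from pathlib import Path
from typing import List


def zip_folder_contents(folder: str, archive: str) -> List[str]:
    """Zip every file under `folder`, storing paths relative to that folder."""
    root = Path(folder)
    archive_path = Path(archive).resolve()
    names = []
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            # Skip directories, and skip the archive itself if it lives in the folder.
            if path.is_file() and path.resolve() != archive_path:
                name = path.relative_to(root).as_posix()
                zf.write(path, name)
                names.append(name)
    return names


if __name__ == "__main__":
    # Assumes the script is run from the directory that contains TimeBot.
    if Path("TimeBot").is_dir():
        print(zip_folder_contents("TimeBot", "TimeBot.zip"))
```

The function returns the list of archived paths, which makes it easy to confirm that app.py and bot.py ended up at the top level.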

Step 8. Deploy and test the bot in Azure Portal

az webapp deployment source config-zip --resource-group "YOUR_RESOURCE_GROUP" --name "YOUR_WEB_APP_NAME" --src "TimeBot.zip"

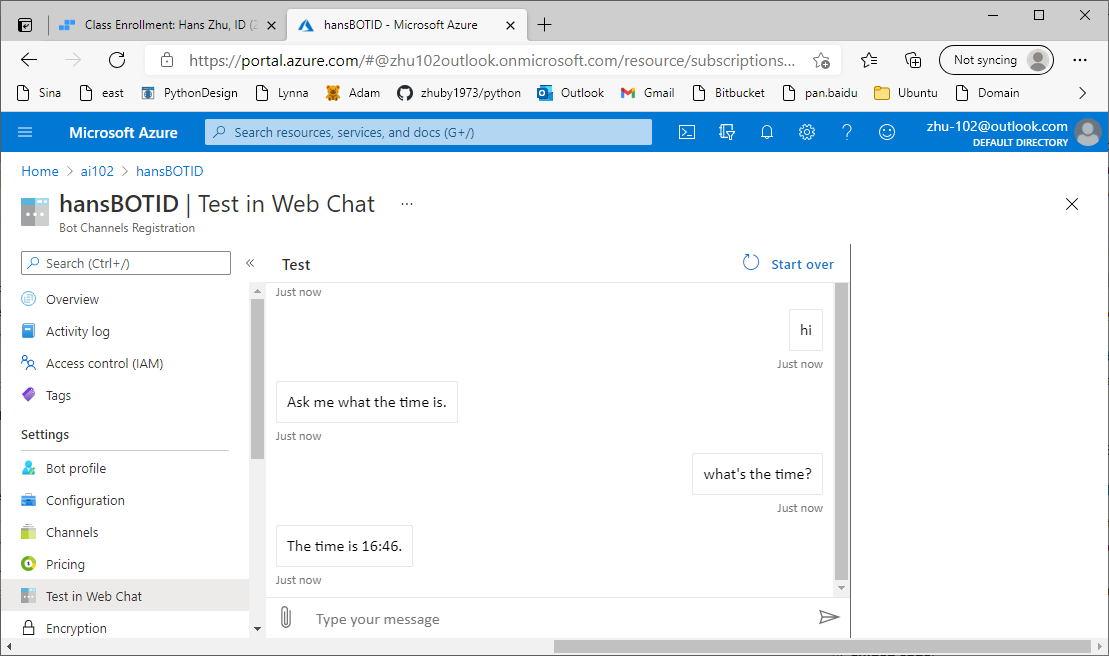

In the Azure portal, in the resource group containing your resources, open the Bot Channels Registration resource (which will have the name you assigned to the BotId parameter when creating Azure resources).

In the Bot management section, select Test in Web Chat. Then wait for your bot to initialize.

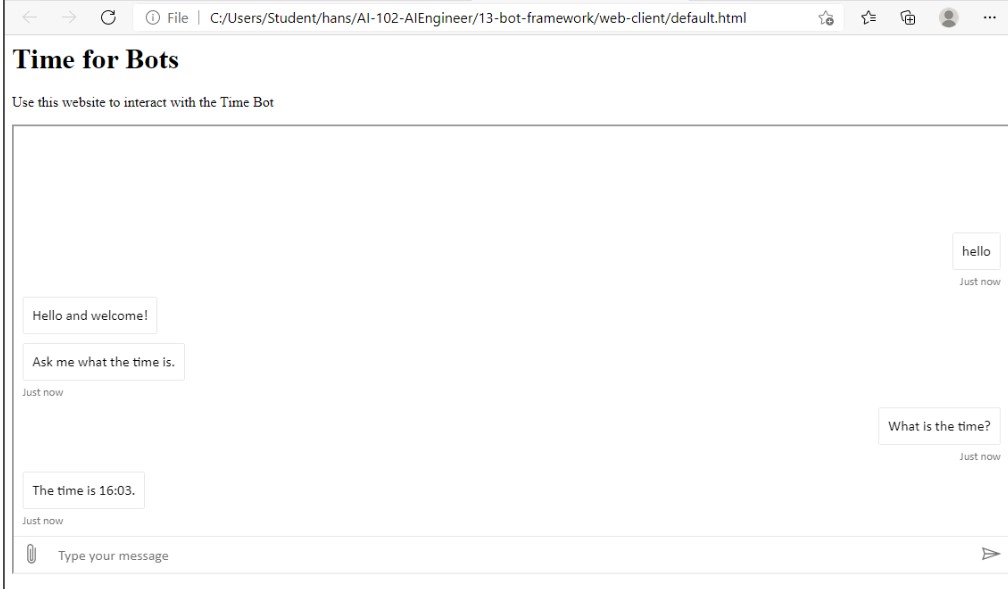

Enter a message such as Hello and view the response from the bot, which should be Ask me what the time is.

Enter What is the time? and view the response.

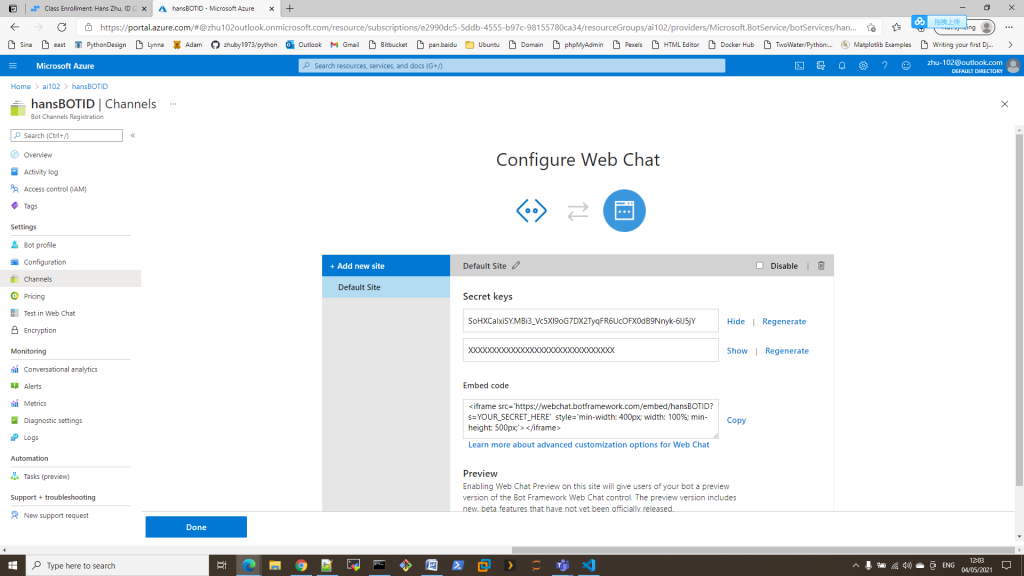

Step 9. Get the Web Chat channel info from Azure Portal

Step 10. Update 13-bot-framework/web-client/default.html with Embed code and Secret Key

<head>

<title>Time for Bots</title>

</head>

<Body>

<h1>Time for Bots</h1>

<p>Use this website to interact with the Time Bot</p>

<!-- add the iframe for the bot here-->

<iframe src='https://webchat.botframework.com/embed/hansBOTID?s=SoHXCaIxiSY.MBi3_Vc5Xl9oG7DX2TyqFR6UcOFX0dB9Nnyk-6lJ5jY' style='min-width: 400px; width: 100%; min-height: 500px;'></iframe>

</Body>
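The iframe `src` follows the pattern visible above: the embed URL ends with the bot ID, and the secret key is passed in the `s` query parameter. As a small sketch, the tag could be generated from those two values. `webchat_iframe` is a hypothetical helper, and `YOUR_BOT_ID` and `YOUR_SECRET_KEY` are placeholders for the values copied from the Web Chat channel page.

```python
from urllib.parse import quote


def webchat_iframe(bot_id: str, secret: str) -> str:
    """Return the Web Chat iframe tag for a bot ID and secret key (hypothetical helper)."""
    # Percent-encode both values so unexpected characters cannot break the URL.
    src = "https://webchat.botframework.com/embed/{}?s={}".format(
        quote(bot_id, safe=""), quote(secret, safe="")
    )
    return (
        "<iframe src='{}' "
        "style='min-width: 400px; width: 100%; min-height: 500px;'></iframe>"
    ).format(src)


if __name__ == "__main__":
    print(webchat_iframe("YOUR_BOT_ID", "YOUR_SECRET_KEY"))
```

The printed tag can be pasted in place of the iframe line in default.html.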

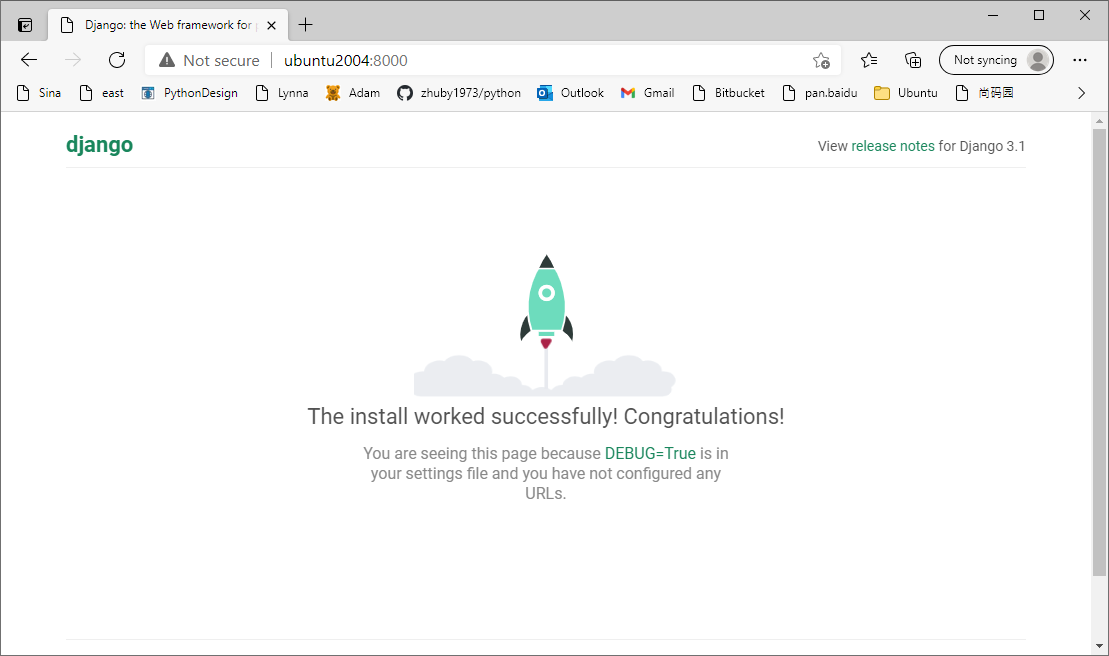

Then open the HTML page in Edge and test the bot service: