18 Places to Find Free Data Sets for Data Science Projects

If you’ve ever worked on a personal data science project, you’ve probably spent a lot of time browsing the internet looking for interesting data sets to analyze. It can be fun to sift through dozens of data sets to find the perfect one. But it can also be frustrating to download and import several CSV files, only to realize that the data isn’t that interesting after all. Luckily, there are online repositories that curate data sets and (mostly) remove the uninteresting ones.

In this post, we’ll walk through several types of data science projects, including data visualization projects, data cleaning projects, and machine learning projects, and identify good places to find data sets for each. Whether you want to strengthen your data science portfolio by showing that you can visualize data well, or you have a spare few hours and want to practice your machine learning skills, we’ve got you covered.

Data Sets for Data Visualization Projects

A typical data visualization project might be something along the lines of “I want to make an infographic about how income varies across the different states in the US”. There are a few considerations to keep in mind when looking for good data for a data visualization project:

It shouldn’t be messy, because you don’t want to spend a lot of time cleaning data.

It should be nuanced and interesting enough to make charts about.

Ideally, each column should be well-explained, so the visualization is accurate.

The data set shouldn’t have too many rows or columns, so it’s easy to work with.

News sites that release their data publicly can be great places to find data sets for data visualization. They typically clean the data for you, and they often already have charts they’ve made that you can learn from, replicate, or improve.
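Before committing to a data set, it can help to check it against the considerations above. The following is a minimal sketch using only Python's standard library; the function name `profile_csv`, the size thresholds, and the sample rows are made up for illustration and are not taken from any of the sites listed here.

```python
import csv
import io


def profile_csv(fileobj, max_rows=100_000, max_cols=50):
    """Summarize a CSV: its size and how many cells are blank in each column."""
    reader = csv.DictReader(fileobj)
    columns = reader.fieldnames or []
    blanks = {name: 0 for name in columns}
    n_rows = 0
    for row in reader:
        n_rows += 1
        for name in columns:
            value = row.get(name)
            # Short rows yield None; treat those like empty strings.
            if value is None or value.strip() == "":
                blanks[name] += 1
    return {
        "rows": n_rows,
        "columns": len(columns),
        "blank_cells": blanks,
        # Thresholds are arbitrary assumptions; tune them to your own tools.
        "small_enough": n_rows <= max_rows and len(columns) <= max_cols,
    }


# Made-up sample data standing in for a downloaded file.
sample = io.StringIO("state,median_income\nOhio,58000\nUtah,\n")
print(profile_csv(sample))
```

To use it on a real download, pass an open file instead, for example `open("some_file.csv", newline="")`.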

- FiveThirtyEight

If you’re interested in data at all, you’ve almost certainly heard of FiveThirtyEight; it’s one of the best-established data journalism outlets in the world. They write interesting data-driven articles, like “Don’t blame a skills gap for lack of hiring in manufacturing” and “2016 NFL Predictions”.

What you may not know is that FiveThirtyEight also makes the data sets used in its articles available online on GitHub and on its own data portal.

Here are some examples:

Airline Safety — contains information on accidents from each airline.

US Weather History — historical weather data for the US.

Study Drugs — data on who’s taking Adderall in the US.

- BuzzFeed

BuzzFeed may have started as a purveyor of low-quality clickbait, but these days it also does high-quality data journalism. And, much like FiveThirtyEight, it publishes some of its data sets publicly on its GitHub page.

Here are some examples:

Federal Surveillance Planes — contains data on planes used for domestic surveillance.

Zika Virus — data about the geography of the Zika virus outbreak.

Firearm background checks — data on background checks of people attempting to buy firearms.

- ProPublica

ProPublica is a nonprofit investigative reporting outlet that publishes data journalism focused on issues of public interest, primarily in the US. They maintain a data store that hosts quite a few free data sets in addition to some paid ones (scroll down on that page to get past the paid ones). Many of them are actively maintained and frequently updated. ProPublica also offers five data-related APIs, four of which are accessible for free.

Here are some examples:

Political advertisements on Facebook — a free collection of data about Facebook ads that is updated daily.

Hate crime news — regularly-updated data about hate crimes reported in Google News.

Voting machine age — data on the age of voting machines that were used in the 2016 election.

Data Sets for Data Processing Projects

Sometimes you just want to work with a large data set. The end result doesn’t matter as much as the process of reading in and analyzing the data. You might use tools like Spark (which you can learn in our Spark course) or Hadoop to distribute the processing across multiple nodes. Things to keep in mind when looking for a good data processing data set:

The cleaner the data, the better — cleaning a large data set can be very time consuming.

There should be an interesting question that can be answered with the data.
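Distributing the processing across multiple nodes usually follows a split, process, and merge pattern. The sketch below imitates that pattern on one machine with threads from the standard library. It is not Spark or Hadoop code, and the function names and chunk size are hypothetical; it only illustrates the idea those tools scale up.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_words(lines):
    """'Map' step: count the words in one chunk of lines."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def distributed_word_count(lines, chunk_size=2, workers=4):
    """Count each chunk independently, then merge the partial counts."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(count_words, chunked(lines, chunk_size))
        total = Counter()
        # 'Reduce' step: combine the per-chunk results into one tally.
        for partial in partials:
            total.update(partial)
    return total


print(distributed_word_count(["a b", "b c", "c c"]))
```

Because each chunk is counted on its own, the same structure works whether the chunks live in one process or on many machines.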

Cloud hosting providers like Amazon and Google are good places to find big data sets. They have an incentive to host data, because it encourages you to analyze that data using their infrastructure (and thus pay them).

- AWS Public Data Sets

Amazon makes large data sets available on its Amazon Web Services platform. You can download the data and work with it on your own computer, or analyze the data in the cloud using EC2 and Hadoop via EMR.

You can read more about how the program works here, and check out the data sets for yourself here (although you’ll need a free AWS account first).

Here are some examples:

Lists of n-grams from Google Books — common words and groups of words from a huge set of books.

Common Crawl Corpus — data from a crawl of over 5 billion web pages.

Landsat images — moderate resolution satellite images of the surface of the Earth.

- Google Public Data Sets

Google also has a cloud hosting service, which is called Google Cloud. With Google Cloud, you can use a tool called BigQuery to explore large data sets.

Google lists all of the data sets on this page. You’ll need to sign up for a Google Cloud account to see it, but the first 1TB of queries you make each month are free, so as long as you’re careful, you won’t have to pay anything.
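Being careful mostly means knowing how much data a query will scan before you run it. Here is a rough sketch of that bookkeeping. It assumes that "1TB" means 2**40 bytes, that the optional `google-cloud-bigquery` package is installed, and that credentials are configured; the helper names are hypothetical. The dry-run function asks BigQuery for an estimate without executing the query.

```python
FREE_BYTES_PER_MONTH = 2 ** 40  # assumption: the free "1TB" is 1 TiB


def remaining_free_bytes(bytes_scanned_so_far, next_query_bytes):
    """Free-tier bytes left after the next query (negative means over budget)."""
    return FREE_BYTES_PER_MONTH - bytes_scanned_so_far - next_query_bytes


def estimate_query_bytes(sql):
    """Return how many bytes a query would scan, without running it.

    Needs the google-cloud-bigquery package and valid credentials,
    so the import is kept inside the function.
    """
    from google.cloud import bigquery

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=config)
    return job.total_bytes_processed


# Pure arithmetic example: half the quota used, next query scans a quarter.
print(remaining_free_bytes(2 ** 39, 2 ** 38))
```

Selecting only the columns you need, rather than every column, is typically the simplest way to keep the scanned bytes low.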

Here are some examples:

USA Names — contains all Social Security name applications in the US, from 1879 to 2015.

Github Activity — contains all public activity on over 2.8 million public Github repositories.

Historical Weather — data from 9000 NOAA weather stations from 1929 to 2016.

- Wikipedia

Wikipedia is a free, online, community-edited encyclopedia. It covers an astonishing breadth of knowledge, with pages on everything from the Ottoman-Habsburg Wars to Leonard Nimoy. As part of Wikipedia’s commitment to advancing knowledge, they offer all of their content for free, and regularly generate dumps of all the articles on the site. Additionally, Wikipedia offers edit history and activity data, so you can track how a page on a topic evolves over time, and who contributes to it.

Methods and a how-to guide for downloading the data are available here.

Here are some examples:

All images and other media from Wikipedia — all the images and other media files on Wikipedia.

Full site dumps — of the content on Wikipedia, in various formats.
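Full dumps are far too large to load into memory at once, so they are usually read as a stream. The sketch below assumes a bz2-compressed XML dump in which each article sits inside a `page` element with a `title` child; the helper names are hypothetical, and the tiny in-memory document stands in for a real dump file.

```python
import bz2
import io
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an XML namespace such as '{http://...}page' down to 'page'."""
    return tag.rsplit("}", 1)[-1]


def iter_page_titles(fileobj):
    """Yield page titles one at a time without loading the whole dump."""
    for _event, elem in ET.iterparse(fileobj, events=("end",)):
        if local_name(elem.tag) == "page":
            for child in elem:
                if local_name(child.tag) == "title":
                    yield child.text
                    break
            # Drop the page's contents so memory use stays low.
            elem.clear()


# Tiny stand-in for a dump; a real one would be opened with bz2.open(path).
xml = (
    b'<mediawiki xmlns="http://www.mediawiki.org/xml/export-0.10/">'
    b"<page><title>Leonard Nimoy</title></page>"
    b"<page><title>Ottoman-Habsburg wars</title></page>"
    b"</mediawiki>"
)
compressed = io.BytesIO(bz2.compress(xml))
print(list(iter_page_titles(bz2.open(compressed))))
```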

Data Sets for Machine Learning Projects

When you’re working on a machine learning project, you want to be able to predict a column using information from the other columns of a data set. In order to be able to do this, we need to make sure that:

The data set isn’t too messy — if it is, we’ll spend all of our time cleaning the data.

There’s an interesting target column to make predictions for.

The other variables have some explanatory power for the target column.
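One rough way to check the last point is to measure how strongly each numeric column moves with the target. The sketch below uses the Pearson correlation, which only captures linear relationships, so treat it as a first screen and not a verdict. The function names and the toy columns are made up for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)


def rank_features(columns, target):
    """Sort column names by the absolute correlation with the target."""
    scores = {name: pearson(values, target) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Toy data: one informative column and one constant column.
features = {"constant": [5, 5, 5], "useful": [1, 2, 3]}
print(rank_features(features, [10, 20, 30]))
```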

There are a few online repositories of data sets curated specifically for machine learning. These data sets are typically cleaned up beforehand, and allow for testing algorithms very quickly.

- Kaggle

Kaggle is a data science community that hosts machine learning competitions. The site offers a variety of interesting, externally contributed data sets. Kaggle has both live and historical competitions. You can download data for either, but you have to sign up for Kaggle and accept the terms of service for the competition.

You can download data from Kaggle by entering a competition. Each competition has its own associated data set. There are also user-contributed data sets available here, though these may be less well cleaned and curated than the data sets used for competitions.

Here are some examples:

Satellite Photograph Order — a set of satellite photos of Earth — the goal is to predict which photos were taken earlier than others.

Manufacturing Process Failures — a collection of variables that were measured during the manufacturing process. The goal is to predict faults with the manufacturing.

Multiple Choice Questions — a data set of multiple choice questions and the corresponding correct answers. The goal is to predict the answer for any given question.

- UCI Machine Learning Repository

The UCI Machine Learning Repository is one of the oldest sources of data sets on the web. Although the data sets are user-contributed, and thus have varying levels of documentation and cleanliness, the vast majority are clean and ready for machine learning to be applied. UCI is a great first stop when looking for interesting data sets.

You can download data directly from the UCI Machine Learning Repository, without registration. These data sets tend to be fairly small, and don’t have a lot of nuance, but they’re great for machine learning.

Here are some examples:

Email spam — contains emails, along with a label of whether or not they’re spam.

Wine classification — contains various attributes of 178 different wines.

Solar flares — attributes of solar flares, useful for predicting characteristics of flares.

- Quandl

Quandl is a repository of economic and financial data. Some of this information is free, but many data sets require purchase. Quandl is useful for building models to predict economic indicators or stock prices. Due to the large amount of available data, it’s possible to build a complex model that uses many data sets to predict values in another.

Here are some examples:

Entrepreneurial activity by race and other factors — contains data from the Kauffman foundation on entrepreneurs in the US.

Chinese macroeconomic data — indicators of Chinese economic health.

US Federal Reserve data — US economic indicators, from the Federal Reserve.

Data Sets for Data Cleaning Projects

Sometimes, it can be very satisfying to take a data set spread across multiple files, clean it up, condense it all into a single file, and then do some analysis. In data cleaning projects, it can take hours of research to figure out what each column in the data set means. It may turn out that the data set you’re analyzing isn’t really suitable for what you’re trying to do, and you’ll need to start over.

That can be frustrating, but it’s a common part of every data science job, and it requires practice.
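Condensing several files into one is a common first step in this kind of project. The following is a minimal sketch with the standard library: it trims stray whitespace, records which file each row came from, and writes the union of all columns. The function name, the `source_file` column, and the sample files are hypothetical, and all rows are held in memory, so it suits modest file sizes only.

```python
import csv
import io


def merge_csv_files(sources, out, source_column="source_file"):
    """Merge CSVs (label -> open text file) into one file; return the row count."""
    rows = []
    fieldnames = [source_column]
    for label, fileobj in sources.items():
        for row in csv.DictReader(fileobj):
            # Trim whitespace in headers and values; skip overflow fields.
            cleaned = {
                key.strip(): (value or "").strip()
                for key, value in row.items()
                if key is not None
            }
            cleaned[source_column] = label
            for key in cleaned:
                if key not in fieldnames:
                    fieldnames.append(key)
            rows.append(cleaned)
    # Columns missing from a given file are written as empty strings.
    writer = csv.DictWriter(out, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return len(rows)


# Two made-up files with overlapping but different columns.
first = io.StringIO("name, score\nAda, 90\n")
second = io.StringIO("name,city\nBob,Oslo\n")
merged = io.StringIO()
merge_csv_files({"a.csv": first, "b.csv": second}, merged)
print(merged.getvalue())
```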

When looking for a good data set for a data cleaning project, you want it to:

Be spread over multiple files.

Have a lot of nuance, and many possible angles to take.

Require a good amount of research to understand.

Be as “real-world” as possible.

These types of data sets are typically found on websites that collect and aggregate data sets. These aggregators tend to have data sets from multiple sources, without much curation. In this case, that’s a good thing — too much curation gives us overly neat data sets that are hard to do extensive cleaning on.

- data.world

Data.world is a user-driven data collection site (among other things) where you can search for, copy, analyze, and download data sets. You can also upload your own data to data.world and use it to collaborate with others.

The site includes some key tools that make working with data from the browser easier. You can write SQL queries within the site interface to explore data and join multiple data sets. They also have SDKs for R and Python that make it easier to acquire and work with data in your tool of choice (you might be interested in reading our tutorial on using the data.world Python SDK).
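If you have not joined data sets with SQL before, the idea can be tried locally first. The sketch below uses Python's built-in `sqlite3` module as a stand-in; it is not the data.world interface, and the table names, columns, and values are invented for illustration.

```python
import sqlite3

# An in-memory database with two small, made-up tables.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE cities (city TEXT, state TEXT);
    CREATE TABLE health (city TEXT, indicator TEXT, value REAL);
    INSERT INTO cities VALUES ('Denver', 'CO'), ('Boston', 'MA');
    INSERT INTO health VALUES
        ('Denver', 'obesity_rate', 20.1),
        ('Boston', 'obesity_rate', 21.7);
    """
)

# Join the two tables on their shared 'city' column.
query = """
    SELECT c.state, h.indicator, h.value
    FROM health AS h
    JOIN cities AS c ON c.city = h.city
    ORDER BY c.state
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```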

All of the data is accessible from the main site, but you’ll need to create an account, log in, and then search for the data you’d like.

Here are some examples:

Climate Change Data — a large set of climate change data from the World Bank.

European soccer data — data on soccer/football in 11 European countries from 2008-2016.

Big Cities Health — health data for major cities in the US.

- Data.gov

Data.gov is an aggregator of public data sets from a variety of US government agencies, as part of a broader push towards more open government. Data can range from government budgets to school performance scores. Much of the data requires additional research, and it can sometimes be hard to figure out which data set is the “correct” version. Anyone can download the data, although some data sets will ask you to jump through additional hoops, like agreeing to licensing agreements before downloading.

You can browse the data sets on Data.gov directly, without registering. You can browse by topic area, or search for a specific data set.

Here are some examples:

Food Environment Atlas — contains data on how local food choices affect diet in the US.

School System Finances — a survey of the finances of school systems in the US.

- The World Bank

The World Bank is a global development organization that offers loans and advice to developing countries. The World Bank regularly funds programs in developing countries, then gathers data to monitor the success of these programs.

You can browse World Bank data sets directly, without registering. The data sets have many missing values (which is great for cleaning practice), and it sometimes takes several clicks to actually get to data.
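A typical cleaning step for this kind of data is reshaping a wide table into a long one and dropping the missing values along the way. The sketch below assumes a layout with one column per year and treats empty cells and `..` as missing; both are assumptions about the export format, and the function name and sample rows are made up.

```python
import csv
import io

MISSING = {"", ".."}  # assumption: markers used for missing values


def wide_to_long(fileobj, id_columns):
    """Turn one-column-per-year rows into (ids, year, value) records.

    Returns the records and the number of missing cells skipped.
    """
    records = []
    skipped = 0
    for row in csv.DictReader(fileobj):
        for column, value in row.items():
            if column is None or column in id_columns:
                continue
            value = (value or "").strip()
            if value in MISSING:
                skipped += 1
                continue
            record = {name: row[name] for name in id_columns}
            record["year"] = column
            record["value"] = float(value)
            records.append(record)
    return records, skipped


# Made-up sample with one blank cell and one '..' cell.
sample = io.StringIO("Country Name,2014,2015\nKenya,1.5,\nPeru,..,2.5\n")
records, skipped = wide_to_long(sample, ["Country Name"])
print(records, skipped)
```

Counting the skipped cells gives a quick sense of how sparse a table is before you invest more time in it.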

Here are some examples:

World Development Indicators — contains country level information on development.

Educational Statistics — data on education by country.

World Bank project Costs — data on World Bank projects and their corresponding costs.

- /r/datasets

Reddit, a popular community discussion site, has a section devoted to sharing interesting data sets. It’s called the datasets subreddit, or /r/datasets. The scope and quality of these data sets varies a lot, since they’re all user-submitted, but they are often very interesting and nuanced.

You can browse the subreddit here without an account (although a free account will be required to comment or submit data sets yourself). You can also see the most highly-upvoted data sets of all time here.

Here are some examples:

All Reddit Submissions — contains reddit submissions through 2015.

Jeopardy Questions — questions and point values from the gameshow Jeopardy.

New York City Property Tax Data — data about properties and assessed value in New York City.

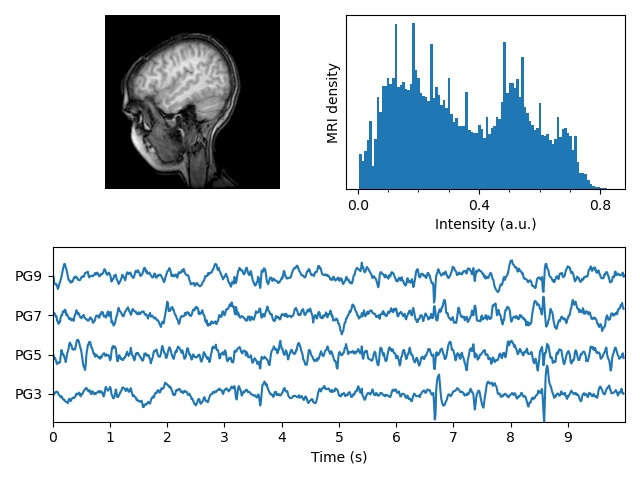

- Academic Torrents

Academic Torrents is a data aggregator geared toward sharing the data sets from scientific papers. It has all sorts of interesting (and often massive) data sets, although it can sometimes be difficult to get context on a particular data set without reading the original paper and/or having some expertise in the relevant domains of science.

You can browse the data sets directly on the site. Since it’s a torrent site, all of the data sets can be immediately downloaded, but you’ll need a BitTorrent client. Deluge is a good free option that’s available for Windows, Mac, and Linux.

Here are some examples:

Enron Emails — a set of many emails from executives at Enron, a company that famously went bankrupt.

Student Learning Factors — a set of factors that measure and influence student learning.

News Articles — contains news article attributes and a target variable.

Bonus: Streaming data

When you’re building a data science project, it’s very common to download a data set and then process it.

However, as online services generate more and more data, an increasing amount is produced continuously and is not offered as a downloadable data set. Some examples of this include data on tweets from Twitter and stock price data. There aren’t many good sources to acquire this kind of data in downloadable form, and a downloadable file would be quickly out of date anyway. Instead, this data is often available in real time as streaming data, via an API.
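Working with a stream differs from working with a file mainly in that you process each record as it arrives and keep only a small running state. The sketch below assumes the stream delivers one JSON object per line, which is a common but not universal format; the function name and the `price` field are hypothetical.

```python
import io
import json


def running_average(lines, field):
    """Yield the running mean of `field` after each record that contains it."""
    count = 0
    total = 0.0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank keep-alive lines
        event = json.loads(line)
        if field not in event:
            continue  # ignore records without the field of interest
        count += 1
        total += float(event[field])
        yield total / count


# An in-memory stand-in for a live stream of records.
stream = io.StringIO(
    '{"price": 10}\n\n{"price": 20}\n{"volume": 5}\n{"price": 30}\n'
)
for average in running_average(stream, "price"):
    print(average)
```

Because the function is a generator, it never needs the whole stream in memory and can run for as long as records keep arriving.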

Here are a few good streaming data sources in case you want to try your hand at a streaming data project.

- Twitter

Twitter has a good streaming API, and makes it relatively straightforward to filter and stream tweets. You can get started here. There are tons of options here — you could figure out what states are the happiest, or which countries use the most complex language. If you’d like some help getting started working with this Twitter API, check out our tutorial here.

- GitHub

GitHub has an API that allows you to access repository activity and code. You can get started with the API here. The options are endless — you could build a system to automatically score code quality, or figure out how code evolves over time in large projects.
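As a starting point, the sketch below counts commits per author from the REST API's commit listing for a repository. It assumes unauthenticated access, which is rate limited, and the function names and `User-Agent` value are made up. The network call is defined but not run here; the demonstration uses a small hand-written payload in the same shape as the API's response.

```python
import json
import urllib.request
from collections import Counter


def fetch_commits(owner, repo, per_page=30):
    """Fetch one page of recent commits for a public repository."""
    url = f"https://api.github.com/repos/{owner}/{repo}/commits?per_page={per_page}"
    request = urllib.request.Request(
        url,
        headers={
            "Accept": "application/vnd.github+json",
            "User-Agent": "dataset-demo",  # hypothetical client name
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def commits_per_author(commits):
    """Count commits by the author name recorded in each commit."""
    return Counter(item["commit"]["author"]["name"] for item in commits)


# Hand-written sample mirroring the fields used above.
sample = [
    {"commit": {"author": {"name": "Ada"}}},
    {"commit": {"author": {"name": "Grace"}}},
    {"commit": {"author": {"name": "Ada"}}},
]
print(commits_per_author(sample))
```

Calling `commits_per_author(fetch_commits(owner, repo))` with a real owner and repository name would run the same count on live data.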

- Quantopian

Quantopian is a site where you can develop, test, and optimize stock trading algorithms. In order to help you do that, the site gives you access to free minute-by-minute stock price data, which you can use to build a stock price prediction algorithm.

- Wunderground

Wunderground has an API for weather forecasts that is free up to 500 API calls per day. You could use these calls to build up a set of historical weather data, and then use that to make predictions about the weather tomorrow.
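With a daily cap, it is worth working out how to pace requests before you start collecting. The arithmetic below uses the 500-calls-per-day figure quoted above; the function names and the example workload are hypothetical.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60
DAILY_CALL_LIMIT = 500  # the free limit quoted in the text


def min_seconds_between_calls(limit=DAILY_CALL_LIMIT):
    """Spacing that spreads the daily allowance evenly over 24 hours."""
    return SECONDS_PER_DAY / limit


def days_to_collect(total_calls, limit=DAILY_CALL_LIMIT):
    """Whole days needed to make `total_calls` requests within the limit."""
    return math.ceil(total_calls / limit)


# Example workload: one call per day of history for ten years.
print(min_seconds_between_calls())
print(days_to_collect(365 * 10))
```

In other words, an evenly paced collector would wait a little under three minutes between calls, and a backlog of a few thousand requests takes about a week.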